Transparency in AI: What Marketers Need to Disclose

This blog explores the critical role of transparency in AI-powered marketing. It outlines what marketers must disclose to consumers—such as data usage, personalisation logic, and automated decisions—to build trust, ensure compliance, and promote ethical AI practices. Drawing from real-world examples and responsible AI frameworks, the post offers actionable steps for marketers to adopt transparent and explainable AI strategies across campaigns and platforms.

RESPONSIBLE AI

Toshak Kadam

7/14/2025 · 5 min read

Introduction

In today’s fast-evolving digital landscape, marketers are increasingly turning to Artificial Intelligence (AI) to personalise experiences, automate decision-making, and optimise campaigns. From predictive analytics to AI-generated content and dynamic pricing, the use of AI has become nearly inseparable from modern marketing strategy.

Yet, as AI capabilities grow, so does the responsibility marketers bear to use these technologies ethically and transparently. Consumers are not only becoming more tech-savvy, but they’re also demanding greater clarity on how their data is being used and how decisions that affect their lives are being made.

Transparency in AI is not just a technical issue—it’s a trust issue.

In this post, we explore what transparency means in the context of AI-powered marketing, why it’s critical, what marketers should disclose, and how they can embed responsible AI principles into their strategies.

Why Transparency in AI Matters

Transparency in AI refers to the clarity and openness with which AI systems operate, specifically how they gather data, make decisions, and influence outcomes. In marketing, this affects how consumers perceive your brand and how regulators evaluate your practices.

1. Building Consumer Trust

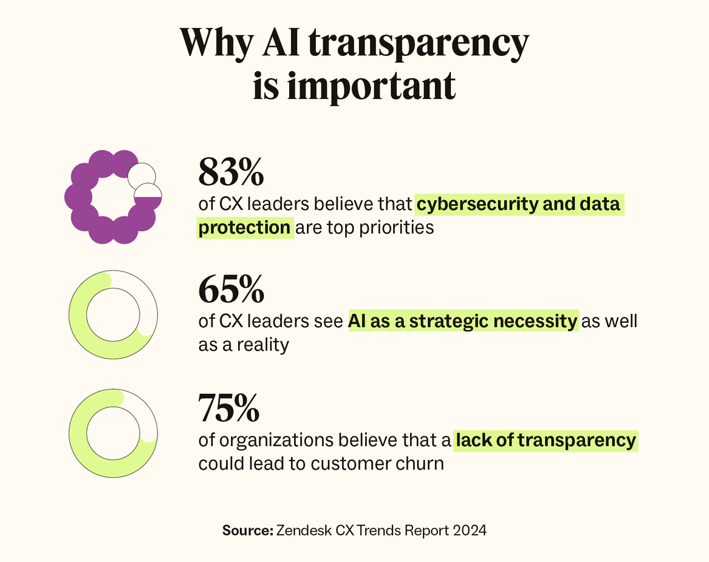

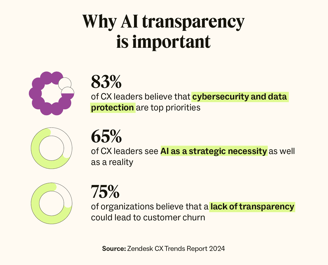

AI can be opaque. If consumers feel manipulated or deceived by AI-driven personalisation, they may lose trust in the brand. According to an IBM survey, over 75% of consumers say they won’t buy from a brand they don’t trust with their data.

2. Navigating Ethical and Legal Risks

With growing regulatory scrutiny (like GDPR, CPRA, and India’s DPDP Act), brands must be proactive in explaining how AI impacts user data, behaviour, and privacy. Non-compliance isn’t just unethical—it can result in significant fines and reputational damage.

3. Avoiding Algorithmic Bias

Lack of transparency can mask biases in your AI models. Biases in ad targeting, hiring recommendations, or dynamic pricing models can lead to discrimination, backlash, or even legal action.

What Marketers Must Disclose: The Pillars of AI Transparency

Transparency is not about revealing proprietary algorithms. It’s about being clear, fair, and informative with consumers, clients, and stakeholders about how you use AI.

1. Data Collection & Usage

Marketers must be clear about:

What data is being collected: Is it behavioural, demographic, psychographic, or biometric?

How it’s collected: Is it via cookies, app usage, third-party trackers?

Why it’s collected: Is it for personalisation, segmentation, retargeting?

🟢 Best Practice: Explain data use in plain language at the point of collection. Include clear messaging such as:

“We use your browsing history to tailor your shopping experience.”
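One way to keep the what, how, and why answers consistent across every channel is to store them as structured records and generate the consumer-facing wording from those records. The Python below is a minimal sketch under that assumption; `DataDisclosure` and `render_notice` are hypothetical names, not part of any consent-management product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataDisclosure:
    """One data point a campaign collects (hypothetical structure)."""
    data_type: str  # what is collected, e.g. "browsing history"
    method: str     # how it is collected, e.g. "first-party cookies"
    purpose: str    # why it is collected, phrased as a verb clause


def render_notice(items: list[DataDisclosure]) -> str:
    """Turn structured disclosure records into plain-language sentences."""
    return "\n".join(
        f"We use your {d.data_type}, collected via {d.method}, to {d.purpose}."
        for d in items
    )


if __name__ == "__main__":
    print(render_notice([
        DataDisclosure("browsing history", "first-party cookies",
                       "tailor your shopping experience"),
    ]))
```

Because the notice is generated rather than hand-written, adding a new data source forces someone to state its method and purpose before it can appear on the page.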

2. Personalisation Logic

Consumers need to know:

How AI tailors content, ads, or recommendations.

Whether personalisation is based on behaviour, preferences, or inferred traits.

🟢 Best Practice: Use tooltips or “Why am I seeing this?” explanations for AI-generated content or recommendations, such as Netflix’s “Because you watched…” rows or Google Ads’ ad disclosure badges.
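A “Why am I seeing this?” explanation can be assembled from the signals behind a recommendation, as long as each signal records whether it came from behaviour, a stated preference, or an inferred trait. The sketch below assumes your recommender can report its contributing signals with rough weights, which many systems do not expose out of the box; `Signal`, `BASIS_LABELS`, and `why_am_i_seeing_this` are hypothetical names.

```python
from dataclasses import dataclass

# Plain-language names for the three personalisation bases named above.
BASIS_LABELS = {
    "behaviour": "your recent activity",
    "preference": "preferences you told us",
    "inferred": "interests we inferred from your activity",
}


@dataclass(frozen=True)
class Signal:
    basis: str     # one of the BASIS_LABELS keys
    detail: str    # what happened, e.g. "you watched a cooking show"
    weight: float  # how much this signal contributed to the recommendation


def why_am_i_seeing_this(signals: list[Signal], top_n: int = 2) -> str:
    """Explain a recommendation using its strongest contributing signals."""
    if not signals:
        return "This item is not personalised for you."
    strongest = sorted(signals, key=lambda s: s.weight, reverse=True)[:top_n]
    reasons = [f"{s.detail} ({BASIS_LABELS[s.basis]})" for s in strongest]
    return "Recommended because " + " and ".join(reasons) + "."
```

Given a behavioural signal “you watched a cooking show” (weight 0.6) and an inferred one “you seem interested in travel” (weight 0.3), this returns “Recommended because you watched a cooking show (your recent activity) and you seem interested in travel (interests we inferred from your activity).” Keeping the basis explicit is what lets the message tell users when a trait was inferred rather than stated.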

3. Automated Decision-Making

If AI is involved in decisions that significantly affect users (like pricing, approvals, content filtering, or access), disclose:

That a decision was made or assisted by AI

The logic or criteria used

The user’s right to contest or appeal

🟢 Best Practice: Adopt “Explainable AI” practices in consumer-facing interfaces. Include clear messaging like:

“This loan approval was determined using an automated system. Learn more about how it works or request a manual review.”
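To make sure none of the three disclosures above is skipped, the notice can be modelled as a record that refuses to exist without them. This is a minimal Python sketch; `AutomatedDecisionNotice` and its fields are hypothetical, and the review URL is a placeholder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomatedDecisionNotice:
    """Hypothetical notice covering the three disclosures listed above."""
    outcome: str               # e.g. "approved" or "declined"
    fully_automated: bool      # True if AI made the decision, False if it assisted
    criteria: tuple[str, ...]  # main factors, in plain language
    review_url: str            # where the user can contest or request manual review

    def __post_init__(self) -> None:
        # Refuse to build a notice that leaves out a required disclosure.
        if not self.criteria:
            raise ValueError("criteria must list at least one factor")
        if not self.review_url:
            raise ValueError("review_url is required so users can contest")

    def render(self) -> str:
        role = "made by" if self.fully_automated else "made with the help of"
        return (
            f"This decision ({self.outcome}) was {role} an automated system. "
            f"Main factors considered: {', '.join(self.criteria)}. "
            f"You can request a manual review at {self.review_url}."
        )
```

Validating in `__post_init__` moves the check from a style guide into the code path: a campaign cannot show a decision notice that omits the criteria or the appeal route.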

4. Use of Synthetic or AI-Generated Content

If AI is used to create marketing content, chat responses, or influencer avatars, disclose:

That the content was generated by an AI tool

Whether human oversight or editorial input was included

🟢 Best Practice: Label AI-generated content, especially in social media, chatbots, or customer service.

A simple line, such as “Generated using AI with human review,” adds credibility.
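Labels are easier to keep accurate when provenance travels with the content as data instead of relying on editors to remember to type it. The sketch below is one way to do that in Python; the `Provenance` categories and label wording are assumptions modelled on the example line above.

```python
from enum import Enum


class Provenance(Enum):
    HUMAN = "human"              # written by people, no label needed
    AI = "ai"                    # AI-generated, published without review
    AI_REVIEWED = "ai_reviewed"  # AI-generated, checked by an editor


LABELS = {
    Provenance.HUMAN: None,
    Provenance.AI: "Generated using AI",
    Provenance.AI_REVIEWED: "Generated using AI with human review",
}


def label_content(text: str, provenance: Provenance) -> str:
    """Append a disclosure label that matches how the content was produced."""
    label = LABELS[provenance]
    return text if label is None else f"{text}\n\n[{label}]"
```

For example, `label_content("Our spring edit is here.", Provenance.AI_REVIEWED)` appends “[Generated using AI with human review]” on its own line.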

5. Third-Party Tools and Model Sources

When relying on third-party AI tools (like GPT-based chatbots, recommendation engines, or ad tech platforms):

Disclose the use of external tools

Disclose their privacy and data handling policies

🟢 Best Practice: Maintain a Public AI Usage Disclosure Statement listing the AI tools used, the purposes they serve, and how they impact user interaction.
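A public disclosure statement stays current more easily if it is generated from an internal registry of AI tools rather than edited by hand. The Python sketch below assumes such a registry exists; `AITool`, `disclosure_statement`, and any tool names or URLs you feed it are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AITool:
    name: str         # tool or vendor name
    purpose: str      # what the tool is used for
    user_impact: str  # how it changes the user's experience
    policy_url: str   # the provider's privacy and data-handling policy


def disclosure_statement(tools: list[AITool]) -> str:
    """Render a plain-text AI usage statement, one entry per tool."""
    lines = ["AI Usage Disclosure Statement", ""]
    for t in sorted(tools, key=lambda tool: tool.name.lower()):
        lines.append(
            f"- {t.name}: used for {t.purpose}. "
            f"Impact: {t.user_impact}. Data policy: {t.policy_url}"
        )
    return "\n".join(lines)
```

Sorting by name keeps the published statement stable between releases, so a reader (or a diff) can see at a glance when a tool was added or removed.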

Industry Examples: Who’s Getting It Right?

🔹 Spotify

Spotify’s algorithmic playlists, like Discover Weekly, come with subtle cues about how they’re generated, building trust while keeping the user experience seamless.

🔹 LinkedIn

When LinkedIn suggests posts or recommends connections, it provides clear justifications, such as “Based on your profile and activity”—a form of low-friction, meaningful transparency.

🔹 Google Ads

Google has introduced “About this ad” labels, which explain why a user is seeing an ad and how to manage personalisation settings.

🔹 H&M

H&M disclosed the use of AI in demand forecasting and supply chain management, which is not directly customer-facing, but is valuable for stakeholder trust and sustainability narratives.

Responsible AI Framework for Marketers

Here’s a simplified framework marketers can use to embed transparency into AI systems:

1. Purpose Clarity

Define why you’re using AI.

Ensure it serves a legitimate marketing or customer benefit.

2. Disclosure Strategy

Build AI Disclosure Templates for campaigns, tools, and platforms.

Maintain user-friendly language across all disclosures.

3. Human Oversight

Ensure AI outputs go through human review, especially for sensitive content.

Train teams to spot hallucinations, biases, or personalisation built on wrong inferences.

4. Ethical Impact Review

Run ethical risk assessments before launching AI-enabled campaigns.

Use explainability tools such as SHAP or LIME, and fairness toolkits such as IBM’s AI Fairness 360.
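To show the kind of check fairness toolkits automate, here is a dependency-free Python sketch of one common metric, the disparate impact ratio: the rate at which one group is shown an offer divided by the rate for a reference group. It is not the AI Fairness 360 API, and the groups and counts in the example are made up; the 0.8 threshold is the widely used “four-fifths” rule of thumb, not a legal standard for marketing.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, was_shown) pairs. Returns, per group,
    the share of users who were shown the offer."""
    shown = defaultdict(int)
    total = defaultdict(int)
    for group, was_shown in records:
        total[group] += 1
        shown[group] += int(was_shown)
    return {g: shown[g] / total[g] for g in total}


def disparate_impact(rates, privileged, unprivileged):
    """Ratio of the unprivileged group's selection rate to the privileged
    group's. 1.0 means parity; lower values mean the unprivileged group is
    shown the offer less often."""
    if rates[privileged] == 0:
        raise ValueError("privileged group has a zero selection rate")
    return rates[unprivileged] / rates[privileged]


if __name__ == "__main__":
    # Made-up delivery log: 40% of group A and 25% of group B saw the offer.
    log = ([("A", True)] * 40 + [("A", False)] * 60
           + [("B", True)] * 25 + [("B", False)] * 75)
    ratio = disparate_impact(selection_rates(log), "A", "B")
    print(f"disparate impact: {ratio:.3f}")
    if ratio < 0.8:  # "four-fifths" rule of thumb
        print("flag for review")
```

With these numbers the script prints a ratio of 0.625 and flags the campaign. A low ratio does not prove discrimination, but it tells you where to point SHAP or LIME next.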

5. Feedback Loops

Provide consumers with channels to contest AI decisions, give feedback, or opt out.

Monitor AI system performance and user sentiment regularly.
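The feedback loop above has three paths: contesting a decision, giving feedback, and opting out. The minimal Python sketch below wires all three into one log so that opt-outs take effect immediately and contests reach a human. It is an in-memory illustration under assumed names (`FeedbackLog`, `submit`, `summary`); a real system would persist this data and connect it to your actual personalisation and support tooling.

```python
from collections import Counter
from dataclasses import dataclass, field

VALID_KINDS = {"contest", "feedback", "opt_out"}


@dataclass
class FeedbackLog:
    """Hypothetical in-memory log; a real system would persist this."""
    entries: list[tuple[str, str, str]] = field(default_factory=list)
    opted_out: set[str] = field(default_factory=set)
    review_queue: list[str] = field(default_factory=list)

    def submit(self, user_id: str, kind: str, message: str = "") -> None:
        if kind not in VALID_KINDS:
            raise ValueError(f"unknown feedback kind: {kind}")
        self.entries.append((user_id, kind, message))
        if kind == "opt_out":
            self.opted_out.add(user_id)        # stop personalising for this user
        elif kind == "contest":
            self.review_queue.append(user_id)  # route the decision to a human

    def is_personalised(self, user_id: str) -> bool:
        return user_id not in self.opted_out

    def summary(self) -> dict[str, int]:
        """Counts per kind, for regular monitoring of user sentiment."""
        return dict(Counter(kind for _, kind, _ in self.entries))
```

Reviewing `summary()` on a schedule gives a simple signal for the monitoring step: a jump in contests or opt-outs after a launch is worth investigating.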

Future Trends: Where AI Transparency is Headed

✅ Algorithmic Audits: Expect organisations to undergo regular AI audits—either by regulators or independent agencies—to assess bias, fairness, and explainability.

✅ Standardised Disclosure Labels: Similar to “Nutrition Labels” on food, expect AI-powered experiences to come with clear AI Disclosure Badges or certifications.

✅ Rise of AI Literacy: As digital users become more aware of AI's role in marketing, transparency will no longer be optional—it will become a competitive differentiator.

Conclusion: Transparency is the New Loyalty

In a world where AI determines what we see, buy, believe, and trust, marketers must lead the charge in using AI responsibly. Transparency isn’t about exposing trade secrets—it’s about respecting user agency, upholding ethical standards, and earning long-term loyalty.

As you embrace AI in your marketing stack, ask yourself:

Would I be comfortable if a customer were to see how this algorithm works?

Can I explain it simply to a non-technical audience?

Have I given users a way to opt out, contest, or understand the decision?

If the answer is yes, you’re not just using AI—you’re using it responsibly.

Action Steps for Marketers

✅ Audit your current AI tools and campaigns for transparency gaps.

✅ Develop a standard AI disclosure policy across your marketing stack.

✅ Make explainability and ethical impact part of every campaign plan.

✅ Educate your team and stakeholders on Responsible AI principles.

✅ Keep learning—this is just the beginning.

Challenges to Implementing Transparency

Despite best intentions, marketers face barriers:

🚧 Technical Complexity

Not all AI systems are easy to explain. Black-box models (like deep learning) can be inscrutable even to their creators.

🚧 Risk of Oversharing

Revealing too much about your algorithmic logic might invite gaming or compromise IP.

🚧 Organisational Silos

Marketing, tech, legal, and compliance teams often operate independently, making coordinated transparency efforts difficult.

🚧 Consumer Apathy or Overload

Even when disclosures are made, users may not read them. The goal should be to design for clarity and simplicity, not verbosity.